Concurrency is the ability to do more than one thing at the same time.

Back in the early days, computers could execute only one program at a time. Modern computers, however, are capable of running many tasks at the same time. For example -

You can browse my blog on a web browser and listen to music on a media player, at the same time.

You can edit a document on a word processor while other applications download files from the internet, at the same time.

Concurrency doesn’t necessarily involve multiple applications. Running multiple parts of a single application simultaneously is also called concurrency. For example -

A word processor formats the text and responds to keyboard events, at the same time.

An audio streaming application reads the audio from the network, decompresses it and updates the display, all at the same time.

A web server, which is essentially a program running on a computer, serves thousands of requests from all over the world, at the same time.

Software that is able to do more than one thing at a time is called concurrent software.

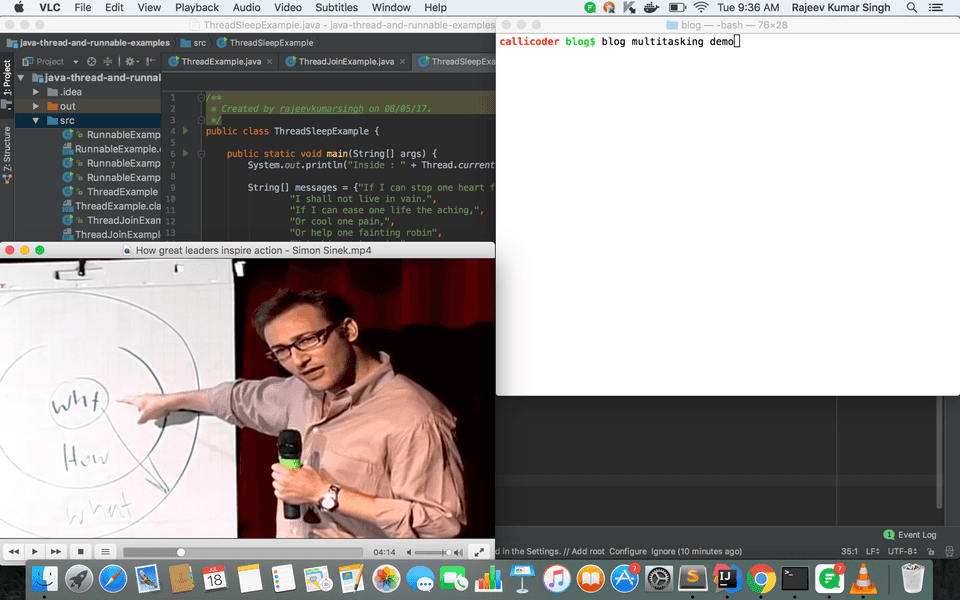

The following screenshot of my computer shows an example of concurrency. My computer system is doing multiple things simultaneously - It is running a video on a media player, accepting keyboard input on a terminal, and building a project in IntelliJ Idea.

Concurrency: Under the Hood

Ok! I understand that computers are able to run multiple tasks at a time, but how do they do it?

I know that computers nowadays come with multiple processors, but isn’t concurrency possible on a single-processor system as well? Also, computers can run far more tasks than they have processors.

How can multiple tasks execute at the same time even on a single CPU?

Well, it turns out that they don’t actually execute at the same physical instant. Concurrency doesn’t imply parallel execution.

When we say - “multiple tasks are executing at the same time”, what we actually mean is that “multiple tasks are making progress during the same period of time.”

The tasks are executed in an interleaved manner. The operating system switches between the tasks so frequently that it appears to the users that they are being executed at the same physical instant.

Therefore, concurrency does not mean parallelism. In fact, true parallelism, where tasks execute at the same physical instant, is impossible on a single-processor system.
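The interleaving described above can be sketched as a toy round-robin scheduler in Java. This is a hypothetical simulation, not how a real operating system schedules work: the `Task` class, the `runRoundRobin` method, and the task names are assumptions made for illustration. A single loop plays the role of the one CPU, and each task runs for one time slice before the "CPU" switches to the next task in the queue.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

/**
 * Toy round-robin scheduler (illustration only): one simulated CPU runs
 * several tasks by giving each a time slice, then switching to the next.
 */
public class InterleavingDemo {

    // Hypothetical task that needs a fixed number of steps to finish.
    static final class Task {
        final String name;
        int remainingSteps;

        Task(String name, int steps) {
            this.name = name;
            this.remainingSteps = steps;
        }
    }

    // Runs all tasks on one simulated CPU and returns the order in which steps executed.
    static List<String> runRoundRobin(List<Task> tasks, int timeSlice) {
        if (timeSlice < 1) {
            throw new IllegalArgumentException("timeSlice must be at least 1");
        }
        Deque<Task> readyQueue = new ArrayDeque<>(tasks);
        List<String> timeline = new ArrayList<>();
        while (!readyQueue.isEmpty()) {
            Task current = readyQueue.pollFirst();
            // Execute up to timeSlice steps of the current task, one step at a time.
            for (int i = 0; i < timeSlice && current.remainingSteps > 0; i++) {
                timeline.add(current.name);
                current.remainingSteps--;
            }
            // "Context switch": an unfinished task goes to the back of the queue.
            if (current.remainingSteps > 0) {
                readyQueue.addLast(current);
            }
        }
        return timeline;
    }

    public static void main(String[] args) {
        List<Task> tasks = List.of(new Task("music", 3), new Task("browser", 2), new Task("editor", 3));
        System.out.println(runRoundRobin(tasks, 1));
        // prints [music, browser, editor, music, browser, editor, music, editor]
    }
}
```

With a time slice of one step, the three tasks take turns, so all of them make progress during the same period even though only one step ever runs at any instant.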

Unit of Concurrency

Concurrency is a very broad term, and it can be used at various levels. For example -

Multiprocessing - Multiple processors/CPUs executing concurrently. The unit of concurrency here is a CPU.

Multitasking - Multiple tasks/processes running concurrently on a single CPU. The operating system executes these tasks by switching between them very frequently. The unit of concurrency, in this case, is a process.

Multithreading - Multiple parts of the same program running concurrently. In this case, we go a step further and divide the same program into multiple parts/threads and run those threads concurrently. The unit of concurrency here is a thread.
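To make the CPU and thread levels concrete, here is a minimal Java sketch (the class `MoreThreadsThanCpus` and the method `runThreads` are hypothetical names). It asks the JVM how many processors it can use and then starts four times as many threads inside one process. All of them still finish, because the operating system interleaves the threads on whatever CPUs are available.

```java
import java.util.concurrent.atomic.AtomicInteger;

public class MoreThreadsThanCpus {

    // Starts threadCount threads (possibly far more than there are CPUs) and returns how many of them ran.
    static int runThreads(int threadCount) {
        AtomicInteger finished = new AtomicInteger(); // thread-safe counter shared by all threads
        Thread[] threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++) {
            threads[i] = new Thread(finished::incrementAndGet);
            threads[i].start();
        }
        for (Thread t : threads) {
            try {
                t.join(); // wait until this thread has finished
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
        }
        return finished.get();
    }

    public static void main(String[] args) {
        // CPU level: the number of processors available to the JVM.
        int cpus = Runtime.getRuntime().availableProcessors();
        // Thread level: one process can still start many more threads than that.
        int threadCount = cpus * 4;
        System.out.println(cpus + " CPUs, " + runThreads(threadCount) + " of " + threadCount + " threads finished");
    }
}
```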

Processes and Threads

Let’s talk about the two basic units of concurrency: processes and threads.

Process

A process is a program in execution. It has its own address space, a call stack, and links to any resources it uses, such as open files.

A computer system normally has multiple processes running at a time. The operating system keeps track of all these processes and facilitates their execution by sharing the processing time of the CPU among them.
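From inside a Java program you can observe that the JVM is itself just one of these processes. The sketch below assumes Java 9 or later, where `ProcessHandle` is available; `ProcessInfo`, `currentPid`, and `visibleProcessCount` are hypothetical names. The process count is only a snapshot and includes only the processes the OS lets this process see.

```java
public class ProcessInfo {

    // The OS-assigned id of the process this JVM is running in (ProcessHandle requires Java 9+).
    static long currentPid() {
        return ProcessHandle.current().pid();
    }

    // How many processes the OS reports as visible to this process; the value is a snapshot.
    static long visibleProcessCount() {
        return ProcessHandle.allProcesses().count();
    }

    public static void main(String[] args) {
        System.out.println("this JVM is process " + currentPid());
        System.out.println("processes visible to it: " + visibleProcessCount());
        // command() is an empty Optional when the OS does not expose this information.
        ProcessHandle.current().info().command()
                .ifPresent(cmd -> System.out.println("started from: " + cmd));
    }
}
```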

Thread

A thread is a path of execution within a process. Every process has at least one thread - called the main thread. The main thread can create additional threads within the process.
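The relationship between the main thread and the threads it creates can be sketched in Java as follows. The class `MainThreadDemo`, the method `threadNames`, and the thread name `worker-1` are hypothetical. When the program is launched normally, `main()` runs on a thread that the JVM names `main`, and that thread starts one additional thread.

```java
public class MainThreadDemo {

    // Starts one additional thread from the calling thread and returns the names of both threads.
    static String[] threadNames() {
        String caller = Thread.currentThread().getName();
        String[] workerName = new String[1];
        Thread worker = new Thread(() -> workerName[0] = Thread.currentThread().getName(), "worker-1");
        worker.start();
        try {
            worker.join(); // wait for the worker; join also makes its write to workerName visible here
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return new String[] { caller, workerName[0] };
    }

    public static void main(String[] args) {
        String[] names = threadNames();
        System.out.println("called from: " + names[0]); // "main" when launched through main()
        System.out.println("created:     " + names[1]); // "worker-1"
    }
}
```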

Threads within a process share the process’s resources including memory and open files. However, every thread has its own call stack.

Since threads share the address space of their process, creating new threads and communicating between them is more efficient than creating new processes and communicating between processes.
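Here is a small Java sketch of what "shared memory, separate stacks" means in practice. The class `SharedMemoryDemo` and the method `parallelSum` are hypothetical. Every thread reads the same `data` array and writes into the same `partialSums` array, both of which live on the shared heap, while each thread's running `sum` is a local variable on its own call stack. The threads exchange results without any copying or inter-process communication.

```java
public class SharedMemoryDemo {

    // Splits data into threadCount slices, sums each slice on its own thread, and combines the results.
    static long parallelSum(int[] data, int threadCount) {
        if (threadCount < 1) {
            throw new IllegalArgumentException("threadCount must be at least 1");
        }
        long[] partialSums = new long[threadCount]; // heap object shared by all threads
        Thread[] workers = new Thread[threadCount];
        int chunk = (data.length + threadCount - 1) / threadCount;
        for (int t = 0; t < threadCount; t++) {
            final int id = t;
            workers[t] = new Thread(() -> {
                int from = id * chunk;
                int to = Math.min(from + chunk, data.length);
                long sum = 0; // local variable: lives on this thread's own stack
                for (int i = from; i < to; i++) {
                    sum += data[i];
                }
                partialSums[id] = sum; // each thread writes only its own slot
            });
            workers[t].start();
        }
        long total = 0;
        for (int t = 0; t < threadCount; t++) {
            try {
                workers[t].join(); // after join, the worker's write to partialSums[t] is visible here
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
                throw new IllegalStateException(e);
            }
            total += partialSums[t];
        }
        return total;
    }

    public static void main(String[] args) {
        int[] data = new int[100];
        for (int i = 0; i < data.length; i++) {
            data[i] = i + 1;
        }
        System.out.println(parallelSum(data, 4)); // prints 5050
    }
}
```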

Common Problems associated with Concurrency

Concurrency greatly improves the throughput of computers by increasing CPU utilization. But along with better performance come a few issues -

Thread interference errors (Race Conditions): Thread interference errors occur when multiple threads read and write a shared variable concurrently, and these read and write operations overlap in execution.

In this case, the final result depends on the order in which the reads and writes take place, which is unpredictable. This makes thread interference errors difficult to detect and fix. Thread interference errors can be avoided by ensuring that only one thread can access a shared resource at a time. This is usually done by acquiring a mutually exclusive lock before accessing any shared resource. However, acquiring locks can lead to other problems like **deadlock** and **starvation**. We'll learn about these problems and their solutions in future tutorials.

Memory consistency errors: Memory consistency errors occur when different threads have inconsistent views of the same data. This happens when one thread updates some shared data, but the update is not made visible to other threads, so they end up using the old data.
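Both problems can be sketched in Java. In the first half of the example below, several threads increment two counters: `unsafeCount` with no lock, and `safeCount` under a `synchronized` lock. Because `count++` is a separate read, add, and write, the unsafe counter often loses updates on a multi-core machine, while the locked counter always matches the expected total. The second half shows a fix for a memory consistency error: marking the stop flag `volatile` guarantees that the worker thread sees the main thread's update. The class and method names are hypothetical, and how many updates the unsafe counter loses varies from run to run.

```java
import java.util.ArrayList;
import java.util.List;

public class ConcurrencyProblemsDemo {

    // ---- Thread interference (race condition) ----
    private int unsafeCount = 0;
    private int safeCount = 0;
    private final Object lock = new Object();

    // count++ is read, add, write: two threads can read the same old value, and one update is lost.
    void incrementUnsafe() { unsafeCount++; }

    // Only one thread at a time can hold the lock, so these increments never overlap.
    void incrementSafe() {
        synchronized (lock) { safeCount++; }
    }

    int unsafeCount() { return unsafeCount; }
    int safeCount() { return safeCount; }

    static ConcurrencyProblemsDemo runCounters(int threads, int incrementsPerThread) {
        ConcurrencyProblemsDemo demo = new ConcurrencyProblemsDemo();
        List<Thread> workers = new ArrayList<>();
        for (int t = 0; t < threads; t++) {
            Thread w = new Thread(() -> {
                for (int i = 0; i < incrementsPerThread; i++) {
                    demo.incrementUnsafe();
                    demo.incrementSafe();
                }
            });
            workers.add(w);
            w.start();
        }
        // join() guarantees that the workers' writes are visible to this thread afterwards.
        workers.forEach(ConcurrencyProblemsDemo::joinUninterruptibly);
        return demo;
    }

    // ---- Memory consistency ----
    // Without volatile, the JIT compiler may hoist the read of `running` out of the loop,
    // so the worker could keep seeing the stale value true and never stop.
    private static volatile boolean running;

    // Starts a busy worker, then asks it to stop; returns true once the worker has stopped.
    static boolean stopWorker() {
        running = true;
        Thread worker = new Thread(() -> {
            while (running) {
                Thread.onSpinWait(); // hint that this is a busy-wait loop (Java 9+)
            }
        });
        worker.start();
        running = false; // volatile write: guaranteed to become visible to the worker
        joinUninterruptibly(worker);
        return !worker.isAlive();
    }

    private static void joinUninterruptibly(Thread t) {
        try {
            t.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting for " + t.getName(), e);
        }
    }

    public static void main(String[] args) {
        ConcurrencyProblemsDemo demo = runCounters(4, 100_000);
        System.out.println("unsafe: " + demo.unsafeCount()); // often less than 400000
        System.out.println("safe:   " + demo.safeCount());   // always 400000
        System.out.println("worker stopped: " + stopWorker());
    }
}
```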

What’s next?

In this blog post, we learned the basics of concurrency, the difference between concurrency and parallelism, the different levels of concurrency, and the problems associated with concurrency.

Although concurrency can be used at various levels, in this tutorial series we’ll focus on concurrency at the thread level, i.e., multithreading.

In the next blog post, we’ll learn how to create new threads and run tasks inside those threads.

Thank you for reading my blog. Please ask any questions in the comment section below.